Well, I managed to get out to Lean Agile Manchester last night for a session on workplace visualisation, and to catch up with Carl Phillips of ao. We had an excellent burger in the Northern Quarter before heading over to madlab, which delayed our arrival (oops, sorry guys!). We spent quite a bit of time chatting about old times and then got down to the problem at hand.

Weirdly, the first example was very reminiscent of where Carl and I started our journey at ao nearly four years ago, although some of the key points were slightly different, e.g. the number of developers and the location of the constraints in the department.

A rough guide to the scenario:

- Specialists with hand-offs, bottleneck in test, multi-tasking galore

- Small changes and incidents getting in the way of more strategic projects

- 4 devs, 1 QA, 1 BA

We came up with a board similar to this:

We introduced WIP limits around the requirements section and set them at 1, as testing tended to be the bottleneck. Reflecting on it this morning, introducing the WIP limits might have been a little premature…

As we were happy with the previous board, Ian challenged us to look at the digital agency scenario.

We started to map out the current value stream for the digital agency. A rough guide to the scenario:

- Multitasking on client projects, fixed scope and deadlines

- Lots of rework, particularly because of UX

- Lots of late changes to requirements

- Third-party dependencies and a long support handover for client projects

- 4 devs, 1 QA, 1 BA

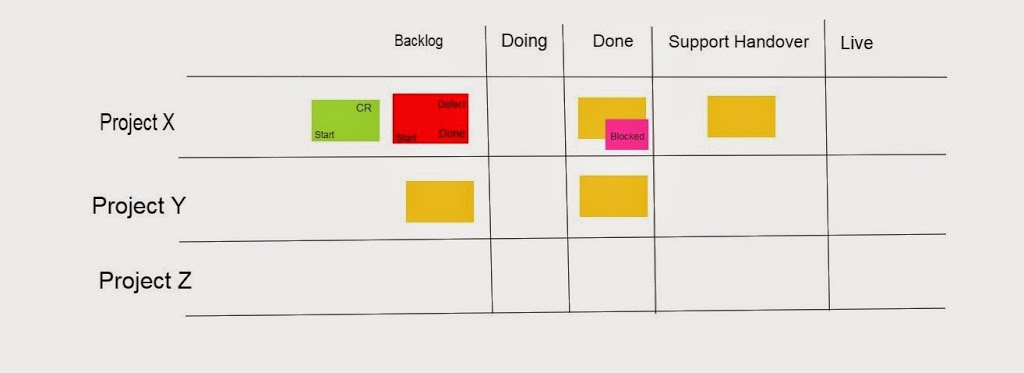

This time we used swim lanes. It looked similar to, but not exactly like, this:

These added inherent WIP limits, because a swim lane could hold only one card within each column. We introduced two additional classes of service (we already had a “feature” class of service for project work):

- Defects, which blocked the current ticket and were tracked across the board.

- CRs (change requests), which were also tracked across the board.

We had differing opinions on whether the “client support hand over” should be an additional column or a ticket; we ended up going with a column.

What I realised about this second scenario was that we mapped what was currently happening. The board layout did introduce some inherent WIP limits, but these were not forced upon the board, i.e. there were no explicit numbers above the card columns.

Visualisation is a good first step in understanding how work flows through a system, but remember not to force concepts onto the current environment: “Start with what you do now”.